Battery Particle Segmentation

Adapting foundation segmentation models to dense, non-describable particle imagery using semi-automatic labeling and parameter-efficient fine-tuning.

Problem

Segmenting particles in scanning electron microscopy (SEM) images is challenging due to dense object packing, irregular particle shapes, and the absence of clear semantic boundaries.

While the Segment Anything Model (SAM) generalizes well to describable objects, it struggles on non-describable, texture-dominated domains such as particle microstructures.

Manual instance-level annotation for such data is prohibitively time-consuming, motivating a workflow that combines automatic mask generation, human-in-the-loop correction, and efficient model adaptation.

Overview

This project followed a three-stage pipeline:

- Generate initial instance masks using the SAM Mask Generator

- Refine masks through manual removal and correction

- Fine-tune SAM using LoRA to adapt it to particle imagery

The goal was to improve both mask quality and instance separation without full model fine-tuning.

Data and Label Generation

Initial annotations were produced using the SAM Mask Generator, which proposes instance masks by sampling a dense grid of points across the image.
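The dense point grid mentioned above can be illustrated with a short sketch. The helper name `make_point_grid` and the 32 × 32 grid over a 512 × 512 image are assumptions chosen for illustration, not the project's actual configuration; each point sits at the centre of one grid cell and would be used as a single foreground prompt.

```python
def make_point_grid(points_per_side, width, height):
    """Return (x, y) pixel coordinates at the centres of a regular grid of cells.

    Hypothetical helper: it only illustrates the idea of prompting a
    segmentation model with evenly spaced points.
    """
    if points_per_side < 1:
        raise ValueError("points_per_side must be >= 1")
    grid = []
    for row in range(points_per_side):
        for col in range(points_per_side):
            # Offset by half a cell so points never sit on the image border.
            x = (col + 0.5) / points_per_side * width
            y = (row + 0.5) / points_per_side * height
            grid.append((x, y))
    return grid


# Assumed values: a 32 x 32 grid over a 512 x 512 image.
points = make_point_grid(32, width=512, height=512)
print(len(points), points[0], points[-1])
```

A denser grid proposes more candidate masks, which helps with tightly packed particles but also increases the number of duplicates and fragments that later filtering has to remove.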

Key steps:

- Default SAM mask generator parameters were first applied

- Hyperparameters (predicted-IoU threshold, stability-score threshold, minimum mask area) were tuned to reduce over-segmentation

- Generated masks were indexed and visually inspected

- Incorrect, merged, or spurious masks were manually removed

- Missing particle regions were added where necessary

This semi-automatic process produced high-quality image–mask pairs suitable for supervised fine-tuning.
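The filtering and manual-removal steps above might look roughly like the following sketch. It assumes each mask is a dict with `area`, `predicted_iou`, and `stability_score` keys, mirroring the records returned by SAM's automatic mask generator; the threshold values and the helper names `filter_masks` and `drop_by_index` are hypothetical and are not the settings used in this project.

```python
def filter_masks(masks, min_iou=0.9, min_stability=0.95, min_area=100):
    """Keep masks that pass all three quality thresholds (assumed values)."""
    return [
        m for m in masks
        if m["predicted_iou"] >= min_iou
        and m["stability_score"] >= min_stability
        and m["area"] >= min_area
    ]


def drop_by_index(masks, rejected):
    """Remove masks that a human reviewer flagged by index."""
    rejected = set(rejected)
    return [m for i, m in enumerate(masks) if i not in rejected]


# Toy records standing in for generated masks (pixel data omitted).
masks = [
    {"area": 1500, "predicted_iou": 0.97, "stability_score": 0.98},
    {"area": 40,   "predicted_iou": 0.95, "stability_score": 0.97},  # too small
    {"area": 2200, "predicted_iou": 0.80, "stability_score": 0.99},  # low IoU
    {"area": 900,  "predicted_iou": 0.93, "stability_score": 0.96},
]

candidates = filter_masks(masks)                  # automatic filtering
reviewed = drop_by_index(candidates, rejected=[1])  # reviewer rejects index 1
print(len(masks), len(candidates), len(reviewed))
```

Indexing the surviving masks before review keeps the manual step cheap: the reviewer only needs to list the indices to discard.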

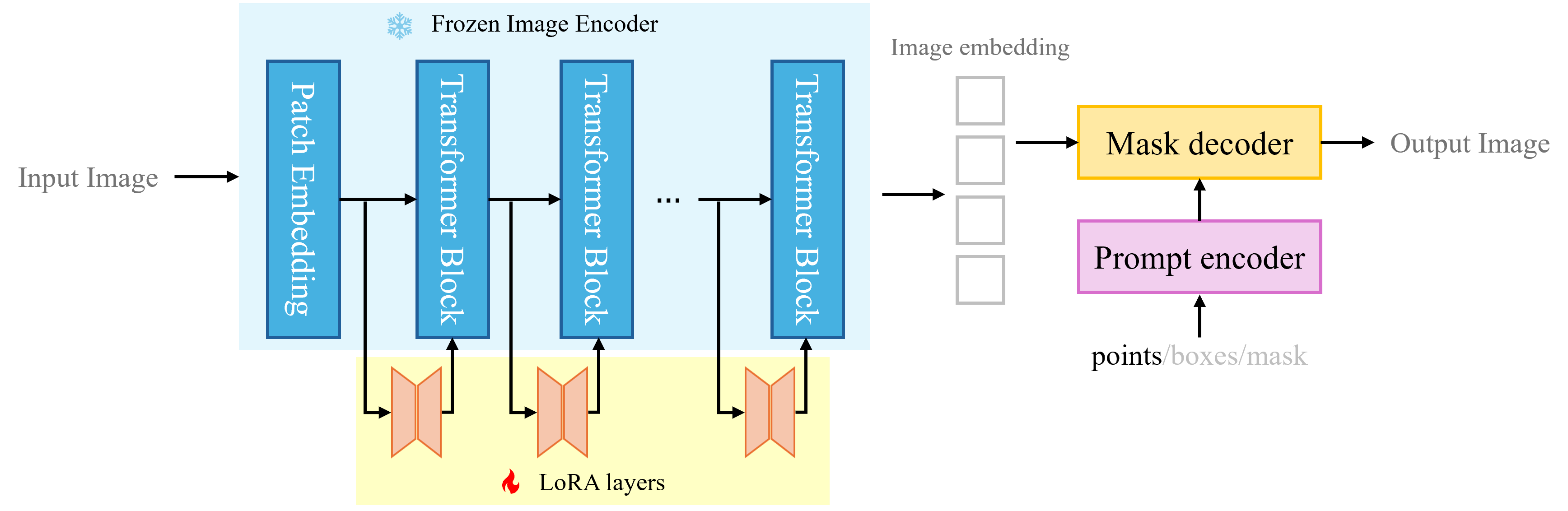

Model Adaptation with LoRA

Rather than fully fine-tuning SAM, Low-Rank Adaptation (LoRA) was used to achieve parameter-efficient domain adaptation.

Design choices:

- The pretrained image encoder weights were frozen to preserve general visual representations

- LoRA layers were inserted into transformer blocks of the encoder

- Only the low-rank adapter parameters were updated during training

- The prompt encoder and mask decoder remained unchanged

This strategy can approach full fine-tuning performance with far fewer trainable parameters. Because the low-rank update can be merged into the frozen weights after training, it adds no inference latency.
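To make the adapter idea concrete, here is a minimal NumPy sketch of a single LoRA-augmented linear layer. It is not the project's training code: the class name `LoRALinear`, the 64 × 64 weight, the rank of 4, and the `alpha` scaling are all assumptions for illustration. The frozen weight `W` is left untouched, and only the two small matrices `A` and `B` would be trained.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = np.random.default_rng(seed)
        d_out, d_in = weight.shape
        self.weight = weight                                # frozen
        self.A = rng.normal(0.0, 0.01, size=(rank, d_in))   # trainable
        self.B = np.zeros((d_out, rank))                    # trainable, zero init
        self.scale = alpha / rank

    def forward(self, x):
        # Base projection plus the low-rank correction.
        return x @ self.weight.T + self.scale * (x @ self.A.T) @ self.B.T

    def merged_weight(self):
        # Folding the update into W leaves one dense matrix for inference.
        return self.weight + self.scale * self.B @ self.A

    def trainable_params(self):
        return self.A.size + self.B.size


rng = np.random.default_rng(1)
W = rng.normal(size=(64, 64))     # toy stand-in for a pretrained projection
layer = LoRALinear(W, rank=4)
x = rng.normal(size=(2, 64))

# With B initialised to zero, the adapted layer starts out identical to the base.
starts_identical = np.allclose(layer.forward(x), x @ W.T)

# Pretend training changed B, then check that merging reproduces the output.
layer.B = rng.normal(size=layer.B.shape)
merge_matches = np.allclose(layer.forward(x), x @ layer.merged_weight().T)

print(starts_identical, merge_matches, layer.trainable_params(), W.size)
```

The zero initialisation of `B` means adaptation starts from the pretrained behaviour, and the merge check shows why the adapter need not cost anything at inference time.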

Training Setup

- Dataset size: 243 SEM images

- Augmented samples: 5,820 image–mask pairs

- Input resolution: 512 × 512

- Train / validation / test split: 70% / 20% / 10%

- Loss function: Dice + Cross-Entropy

- Optimizer: Adam with weight decay (0.01)

- Learning rate: 1e-4

- Batch size: 8

- Training epochs: 40

- Experiment tracking: Weights & Biases
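The combined loss listed above could be sketched as follows. This assumes binary foreground/background masks, so cross-entropy is taken in its binary form, and it assumes equal weighting of the two terms; the write-up does not state either detail, and the function names are hypothetical.

```python
import numpy as np


def dice_loss(probs, target, eps=1e-6):
    """Soft Dice loss: 1 - 2|P.T| / (|P| + |T|), computed on probabilities."""
    intersection = np.sum(probs * target)
    denominator = np.sum(probs) + np.sum(target)
    return float(1.0 - (2.0 * intersection + eps) / (denominator + eps))


def bce_loss(probs, target, eps=1e-7):
    """Mean binary cross-entropy, with clipping to avoid log(0)."""
    p = np.clip(probs, eps, 1.0 - eps)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))


def combined_loss(probs, target, dice_weight=1.0, ce_weight=1.0):
    """Weighted sum of Dice and cross-entropy (equal weights are an assumption)."""
    return dice_weight * dice_loss(probs, target) + ce_weight * bce_loss(probs, target)


target = np.array([[1.0, 1.0], [0.0, 0.0]])
good = np.array([[0.9, 0.8], [0.1, 0.2]])   # mostly correct prediction
bad = 1.0 - good                            # mostly wrong prediction

print(combined_loss(good, target), combined_loss(bad, target))
```

Dice rewards region overlap and is less sensitive to class imbalance, while cross-entropy supplies a per-pixel gradient; pairing them is a common choice for segmentation with small or thin foreground regions.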

Results

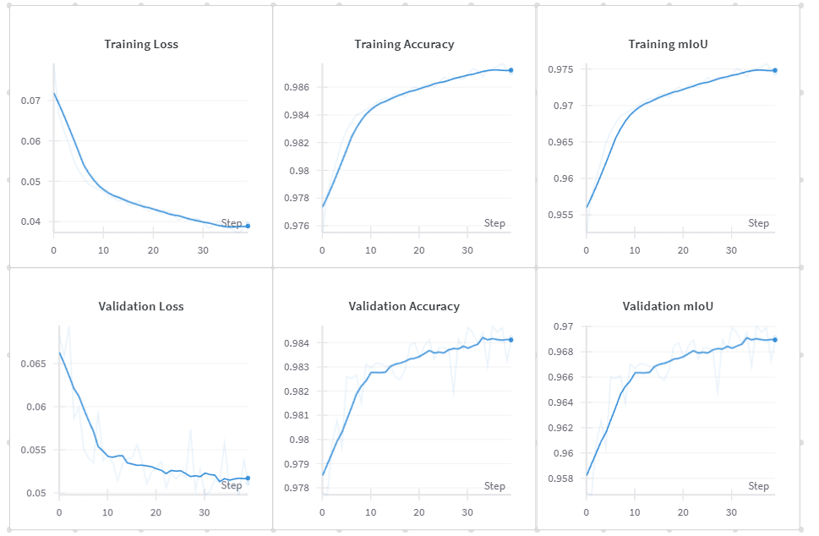

Quantitative Performance

- Average test Dice score: 96.14%

- Significant improvement over the base SAM mask generator

- Stable convergence across training and validation splits

Training and validation curves showing stable optimization and consistent gains in accuracy and mIoU.
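For reference, the Dice and IoU metrics reported here can be computed per image as in the sketch below, assuming binary masks; mIoU would then be the mean of the per-image (or per-class) IoU values. The function names and toy masks are illustrative, and returning 1.0 for two empty masks is a convention chosen for this sketch.

```python
import numpy as np


def dice_score(pred, target):
    """Dice = 2|P and T| / (|P| + |T|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    total = pred.sum() + target.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as a perfect match
    return float(2.0 * np.logical_and(pred, target).sum() / total)


def iou_score(pred, target):
    """IoU = |P and T| / |P or T| for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0
    return float(np.logical_and(pred, target).sum() / union)


pred = [[1, 1, 0], [0, 1, 0]]
target = [[1, 1, 0], [0, 0, 1]]

dice = dice_score(pred, target)
iou = iou_score(pred, target)
print(dice, iou)
```

The two metrics are monotonically related (Dice = 2·IoU / (1 + IoU)), so Dice is never lower than IoU for the same prediction.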

Qualitative Improvements

- Improved instance separation in densely packed regions

- Reduced under- and over-segmentation

- More stable mask boundaries across scales

- Clear improvement in SAM predictor outputs after adaptation

Across multiple test cases, LoRA-adapted SAM improved Dice scores from the high-80s to mid-90s, with the largest gains observed in dense particle clusters.

Ablation Insights

- Increasing LoRA rank improves performance up to a point

- Rank 512 achieved the best trade-off between accuracy and parameter count

- Higher ranks provided diminishing returns while increasing training cost

- Optimal learning rate was 1e-4; both higher and lower rates degraded performance
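The cost side of the rank trade-off follows from simple arithmetic: a LoRA adapter on a weight of shape d_out × d_in adds rank × (d_in + d_out) trainable parameters, so the count grows linearly with rank. The sketch below uses a hypothetical 1024 × 1024 projection and illustrative ranks; these are not the layer sizes or the sweep used in the project.

```python
def lora_param_count(d_in, d_out, rank):
    """Trainable parameters added by one LoRA adapter: A is r x d_in, B is d_out x r."""
    return rank * (d_in + d_out)


def full_param_count(d_in, d_out):
    """Parameters in the dense weight that LoRA leaves frozen."""
    return d_in * d_out


d_in = d_out = 1024  # hypothetical layer size, for illustration only
for rank in (4, 16, 64):
    added = lora_param_count(d_in, d_out, rank)
    fraction = added / full_param_count(d_in, d_out)
    print(f"rank={rank:>3}  adapter params={added:>7}  fraction of dense={fraction:.4f}")
```

Running the same calculation with the real layer dimensions gives a quick way to compare candidate ranks before committing to a training sweep.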

Notes and Lessons Learned

- Foundation models require explicit domain adaptation for non-describable imagery

- SAM Mask Generator is effective for bootstrapping labels but insufficient alone

- Human-in-the-loop correction dramatically improves downstream performance

- LoRA provides a practical balance between adaptability and efficiency

- Encoder-side adaptation is more impactful than decoder-side tuning for dense texture domains

This project informed later work on memory-augmented segmentation, guided adaptation, and efficient fine-tuning of large vision models.