Temperature Super Resolution

Learning-based super resolution of thermal images to reconstruct high-resolution temperature fields from low-resolution sensor data.

Problem

Thermal imaging systems often trade spatial resolution for cost, frame rate, or hardware constraints.

As a result, low-resolution temperature maps lack the spatial detail required for accurate analysis, monitoring, or downstream decision-making.

The objective of this project was to reconstruct high-resolution temperature distributions from low-resolution thermal inputs, while preserving both global structure and localized temperature gradients.
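Stated formally, and assuming the 4× scale implied by the resolutions listed under Data together with the L2 loss listed under Training, the task is to learn a network f_θ that maps each low-resolution field x_i to an estimate of its high-resolution counterpart y_i. The symbols below (x_i, y_i, f_θ, N) are notation introduced here for illustration, and averaging the squared error over pixels is an assumption about how the loss was normalized:

```latex
x_i \in \mathbb{R}^{120 \times 160}, \qquad
y_i \in \mathbb{R}^{480 \times 640}, \qquad
\hat{y}_i = f_\theta(x_i)

\mathcal{L}(\theta) = \frac{1}{N} \sum_{i=1}^{N}
  \frac{1}{H W} \left\lVert f_\theta(x_i) - y_i \right\rVert_2^2,
  \qquad H = 480,\; W = 640
```

Because 480 / 120 = 640 / 160 = 4, the network has to produce 16 output values for every input pixel.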

Key challenges:

- Severe information loss in low-resolution inputs (a 4× upscale per axis means the input has 16× fewer pixels than the target)

- Smooth but spatially complex temperature fields

- Sensitivity to noise and illumination artifacts

- Need for physically plausible reconstructions, not just visually sharp outputs

Approach

Data

- Paired low-resolution and high-resolution thermal images

- Total samples: 9,864 image pairs

- Resolutions:
  - Low-res: 120 × 160 (adjusted from the original 122 × 160 so that a 4× upscale per axis matches the high-res grid exactly)
  - High-res: 480 × 640

- Train / validation / test split: 80% / 10% / 10%

- Augmentation applied only to training data:
  - Flip, rotation, shift, scale
  - Elastic deformation
  - Brightness, contrast, gamma
  - Hue/saturation and noise
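The split and the paired-augmentation constraint can be sketched in a few lines of NumPy. The sample count and 80/10/10 ratios come from the list above; the random seed, the noise level, and the choice to add noise to the low-res input only are assumptions for illustration. Only flips and noise are shown; rotation, shift, scale, elastic and photometric transforms are omitted. The key point is that every geometric transform must be applied identically to both images of a pair so they stay aligned.

```python
import numpy as np

def split_indices(n, train=0.8, val=0.1, seed=0):
    """Shuffle sample indices and cut them into train / validation / test subsets."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n)
    n_train = int(round(train * n))
    n_val = int(round(val * n))
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

def augment_pair(lr, hr, rng, noise_std=0.01):
    """Apply identical random flips to an (LR, HR) pair, then add Gaussian noise to LR only."""
    if rng.random() < 0.5:  # horizontal flip, same for both images
        lr, hr = lr[:, ::-1], hr[:, ::-1]
    if rng.random() < 0.5:  # vertical flip, same for both images
        lr, hr = lr[::-1, :], hr[::-1, :]
    if noise_std > 0:  # simulated sensor noise on the input only (assumption)
        lr = lr + rng.normal(0.0, noise_std, size=lr.shape)
    return np.ascontiguousarray(lr), np.ascontiguousarray(hr)

train_idx, val_idx, test_idx = split_indices(9864)
print(len(train_idx), len(val_idx), len(test_idx))  # 7891 986 987

# Usage on one synthetic pair: the LR image is a 4x4 block average of the HR image.
rng = np.random.default_rng(1)
hr = rng.random((480, 640))
lr = hr.reshape(120, 4, 160, 4).mean(axis=(1, 3))
lr_aug, hr_aug = augment_pair(lr, hr, rng)
```

Splitting on pair indices rather than on individual images guarantees that a low-res input and its high-res target always land in the same subset.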

Model

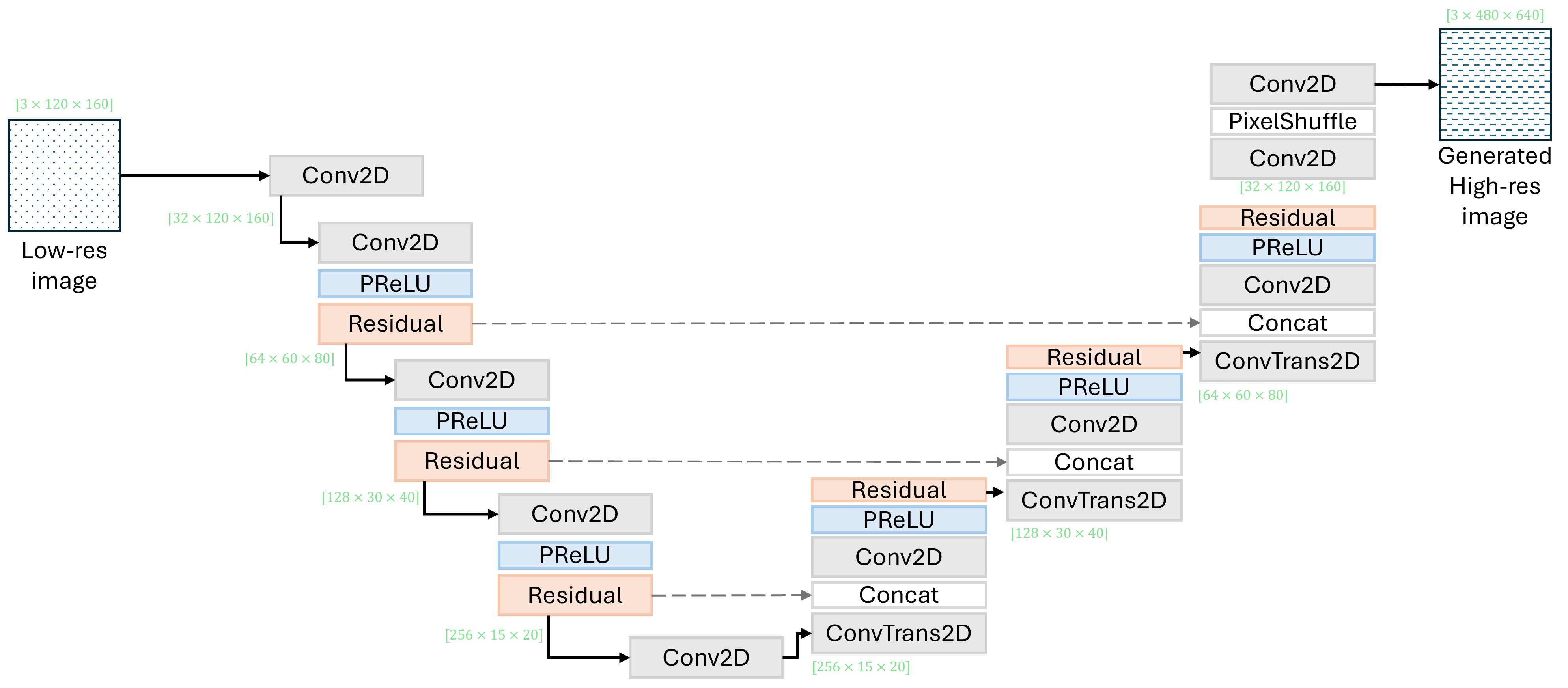

- Residual U-Net inspired by SRResUNet

- Encoder–decoder structure with:
  - Residual blocks and PReLU activations
  - Multi-scale feature aggregation via skip connections

- Upsampling performed with PixelShuffle (sub-pixel convolution), which reduces the checkerboard artifacts common with transposed convolutions

- Final output reconstructs full-resolution temperature maps
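The two building blocks named above can be illustrated in NumPy: a residual block with PReLU, and the PixelShuffle rearrangement that trades channels for spatial resolution. This is a simplified sketch, not the project's network: 1×1 channel mixing stands in for the real 3×3 convolutions, the encoder, decoder and skip connections are omitted, and the 16-channel width, random weights and single 4× shuffle (rather than, say, two 2× stages) are assumptions. The index ordering follows `torch.nn.PixelShuffle`.

```python
import numpy as np

def pixel_shuffle(x, r):
    """Rearrange (N, C*r*r, H, W) -> (N, C, H*r, W*r), following torch.nn.PixelShuffle's ordering."""
    n, c_r2, h, w = x.shape
    if c_r2 % (r * r):
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    x = x.reshape(n, c, r, r, h, w)    # split channels into (c, row offset i, col offset j)
    x = x.transpose(0, 1, 4, 2, 5, 3)  # reorder to (n, c, h, i, w, j)
    return x.reshape(n, c, h * r, w * r)

def prelu(x, a=0.25):
    """PReLU with a single slope; 0.25 matches PyTorch's default initial value."""
    return np.where(x >= 0, x, a * x)

def residual_block(x, w1, w2, a=0.25):
    """y = x + W2 * PReLU(W1 * x), using 1x1 convolutions (channel mixing) instead of 3x3 ones."""
    h = prelu(np.einsum("oc,nchw->nohw", w1, x), a)
    return x + np.einsum("oc,nchw->nohw", w2, h)

# Usage: a 16-channel feature map at the 120 x 160 input size becomes one 480 x 640 map.
rng = np.random.default_rng(0)
feat = rng.normal(size=(1, 16, 120, 160)).astype(np.float32)
c = feat.shape[1]
feat = residual_block(feat, 0.1 * rng.normal(size=(c, c)), 0.1 * rng.normal(size=(c, c)))
out = pixel_shuffle(feat, 4)
print(out.shape)  # (1, 1, 480, 640)
```

Because PixelShuffle is a pure reshuffle of values computed at low resolution, every output pixel comes from exactly one learned channel, whereas a strided transposed convolution overlaps kernel footprints unevenly, which is the usual source of checkerboard patterns.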

Training

- Loss function: L2 reconstruction loss

- Optimizer: Adam

- Learning rate: 1e-4

- Batch size: 32

- Training duration: 100 epochs

- Experiment tracking using Weights & Biases
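The optimizer settings above can be made concrete with a minimal NumPy Adam update and an L2 (mean squared error) loss. The learning rate, batch size and epoch count come from the list; the betas and epsilon are assumed to be PyTorch's Adam defaults, treating "L2 loss" as per-pixel MSE is an assumption, and the toy problem (fitting a single gain) stands in for the real network and data loop, which are not shown.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class TrainConfig:
    lr: float = 1e-4
    batch_size: int = 32
    epochs: int = 100
    betas: tuple = (0.9, 0.999)  # assumed: PyTorch's Adam defaults
    eps: float = 1e-8            # assumed: PyTorch's Adam default

def mse(pred, target):
    """L2 reconstruction loss, taken here as the mean squared error (assumption)."""
    return float(np.mean((pred - target) ** 2))

class Adam:
    """Minimal Adam optimizer over a list of NumPy parameter arrays, updated in place."""

    def __init__(self, params, cfg):
        self.params, self.cfg, self.t = params, cfg, 0
        self.m = [np.zeros_like(p) for p in params]  # first-moment estimates
        self.v = [np.zeros_like(p) for p in params]  # second-moment estimates

    def step(self, grads):
        self.t += 1
        b1, b2 = self.cfg.betas
        for p, grad, m, v in zip(self.params, grads, self.m, self.v):
            m[...] = b1 * m + (1 - b1) * grad
            v[...] = b2 * v + (1 - b2) * grad * grad
            m_hat = m / (1 - b1 ** self.t)  # bias correction
            v_hat = v / (1 - b2 ** self.t)
            p -= self.cfg.lr * m_hat / (np.sqrt(v_hat) + self.cfg.eps)

# Usage on a toy problem: learn a gain g so that g * x matches y = 1.5 * x.
cfg = TrainConfig()
rng = np.random.default_rng(0)
x = rng.normal(size=(cfg.batch_size, 8, 8))  # toy batch of 32 "inputs"
y = 1.5 * x                                  # toy targets
g = np.array([1.0])                          # single learnable parameter
opt = Adam([g], cfg)
initial = mse(g * x, y)
for _ in range(200):
    grad = np.mean(2.0 * (g * x - y) * x)    # derivative of the MSE with respect to g
    opt.step([np.array([grad])])
final = mse(g * x, y)
print(initial > final)  # True
```

With a learning rate of 1e-4, Adam moves each parameter by roughly 1e-4 per step when gradients have a consistent sign, which is why the toy gain only creeps toward 1.5 over 200 steps.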

Evaluation

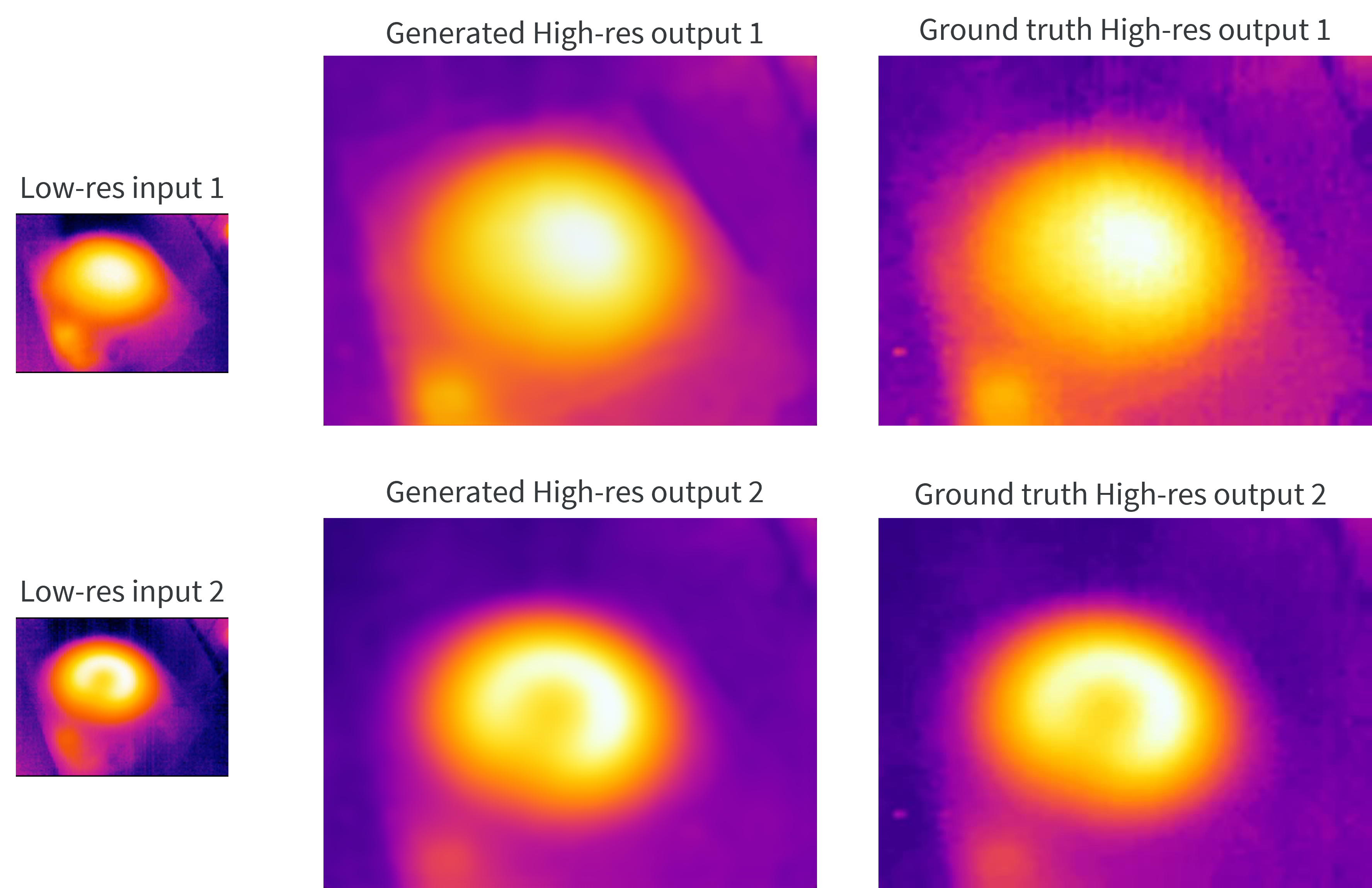

- Reconstruction loss evaluated on validation and test splits

- Final losses:
  - Training: 0.0114
  - Validation: 0.0146
  - Test: 0.0139

- Qualitative evaluation focused on:
  - Preservation of temperature gradients
  - Structural consistency with ground truth
  - Absence of hallucinated artifacts
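Gradient preservation was assessed qualitatively here; one way to put a number on it is to compare spatial gradient magnitudes of the prediction and the ground truth. The sketch below is a hypothetical complement to the reported losses, not a metric used in the project: the PSNR helper assumes temperatures normalized to [0, 1], and the Gaussian hot spot and nearest-neighbour baseline are synthetic test data.

```python
import numpy as np

def mse(pred, target):
    """Mean squared error between two temperature fields."""
    return float(np.mean((pred - target) ** 2))

def psnr(pred, target, data_range=1.0):
    """PSNR in dB; data_range=1.0 assumes temperatures normalized to [0, 1]."""
    err = mse(pred, target)
    return float("inf") if err == 0 else float(10.0 * np.log10(data_range ** 2 / err))

def gradient_error(pred, target):
    """Mean absolute difference between the spatial gradient magnitudes of two 2D fields."""
    gy_p, gx_p = np.gradient(pred)
    gy_t, gx_t = np.gradient(target)
    return float(np.mean(np.abs(np.hypot(gx_p, gy_p) - np.hypot(gx_t, gy_t))))

# Usage: compare a smooth synthetic hot spot with a blocky nearest-neighbour upscale of its
# 4x-downsampled version (a naive baseline, not the trained model).
yy, xx = np.mgrid[0:480, 0:640]
target = np.exp(-((yy - 240) ** 2 + (xx - 320) ** 2) / (2 * 60.0 ** 2))
lr = target.reshape(120, 4, 160, 4).mean(axis=(1, 3))
baseline = np.repeat(np.repeat(lr, 4, axis=0), 4, axis=1)
print(round(psnr(baseline, target), 1), round(gradient_error(baseline, target), 5))
```

A blocky upscale can score a reasonable PSNR while its gradients are zero inside each block and spike at block edges, which is exactly the failure that a gradient-based check exposes and a plain L2 loss can hide.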

Notes & Lessons Learned

- Residual connections proved critical for stable convergence in thermal super resolution

- PixelShuffle upsampling produced smoother, more physically plausible outputs than naïve transposed convolutions

- L2 loss was sufficient given the smooth nature of temperature fields; perceptual losses were not needed

- Data augmentation significantly improved generalization, especially given the limited variability of the sensor data

- Visual realism must be evaluated alongside numerical loss for safety-critical thermal applications

This project reinforced the importance of architecture choice and data quality when applying super-resolution techniques to physically grounded imaging modalities.